5 Case Studies: The Week AI Stopped Advising and Started Doing

What happens when AI grows a pair of helping hands

Those of you who follow my wider interests know I've been deep in the AI space for three years now. This piece is a departure from the usual Rational Ground fare: no pandemic policy, no media criticism. Instead, it's about something that quietly changed how I work. If you're curious what AI actually looks like when it stops being a chatbot and starts being a coworker, read on. If not, regular programming resumes shortly.

A colleague asked me last week: “What happened? We’ve had AI for three years. Why is everyone suddenly losing their minds?”

It’s a fair question. ChatGPT launched in November 2022. We’ve had GPT-4, Claude, Gemini — three years of frontier models that could write essays, analyze spreadsheets, draft marketing copy. I’ve used all of them, every day, since the beginning.

And here’s what three years of AI gave me: more work.

Not less. More. Better strategies I didn’t have time to implement. Brilliant analyses that sat in Google Docs. Content calendars that never got executed. Campaign optimizations I knew would work but couldn’t staff.

I was drowning in strategy docs. AI had become the world’s most productive consultant — endlessly generating recommendations I couldn’t act on. Like hiring McKinsey but having no employees to implement anything they said.

Then something changed. Not the models — they’re marginally better. What changed was this:

AI stopped advising. It started doing.

The Hands Problem

For three years, AI had a brain and a mouth but no hands. It could think and it could talk. It couldn’t do anything.

Ask it to write a fundraising creative? Beautiful. Now manually copy it into your campaign platform, upload the image, set the audience parameters, fill in 12 form fields, click submit. Repeat 24 times.

Ask it for a competitive analysis? Thorough. Now go pull the data yourself, build the dashboard yourself, cross-reference the findings yourself.

The thinking was free. The doing still cost the same.

What happened in the last few months is that AI got hands. Persistent memory across sessions. The ability to operate real software — browsers, databases, APIs, file systems. Sub-agents that spin up, do a job, and report back. Scheduled tasks that run at 2 AM while you sleep.

The model didn’t change. The operating model changed. AI went from a consultant who emails you a deck to a team member who ships the work.

Let me show you what that looks like in practice.

Case Study 1: The Creative Machine

The problem: I manage SMS/MMS fundraising campaigns for a nonprofit. Every campaign needs fresh creative — emotional copy, properly formatted, compliant with messaging regulations, uploaded to a specific dashboard with precise field requirements. A typical campaign needs 4-8 creatives, each manually entered.

The old way: I’d spend 5-6 hours per day writing copy, testing variations, uploading to the campaign platform. Every. Single. Day.

What AI does now — the full loop:

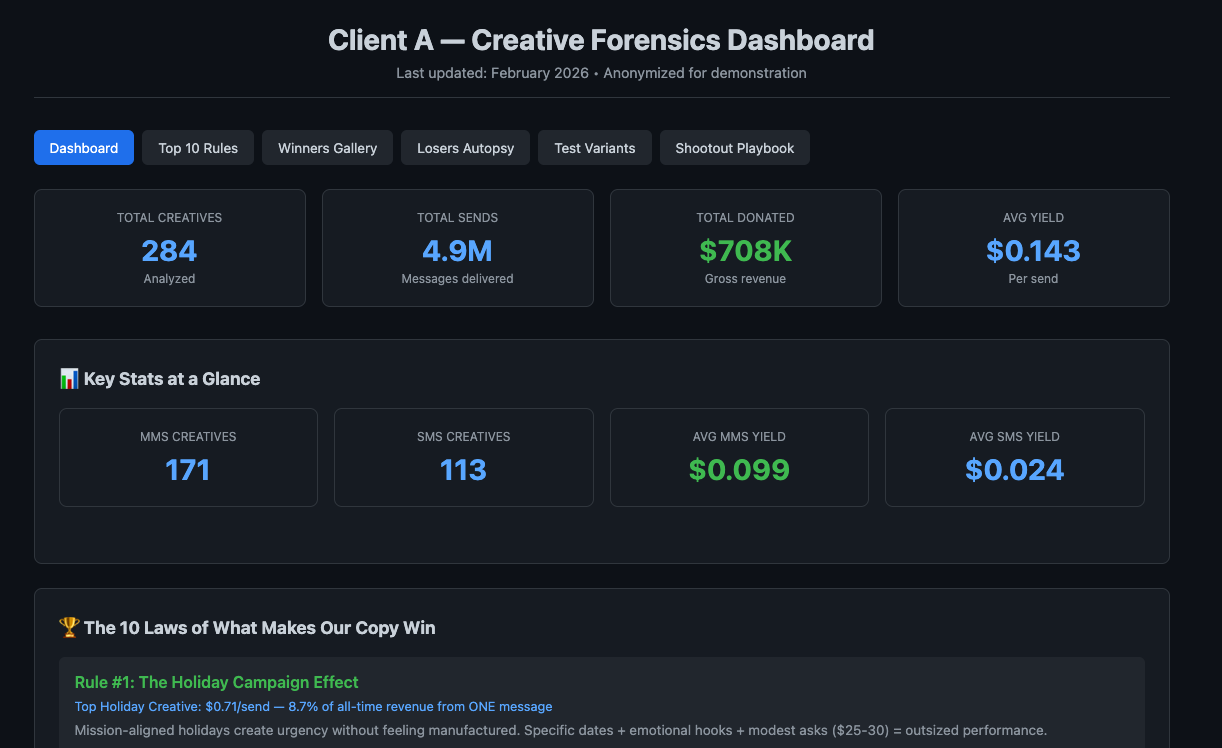

First, it ingested our entire creative history — hundreds of creatives, millions of sends, substantial donation revenue. Not just the numbers. It read every message, analyzed sentence structures, emotional triggers, formatting patterns, open rates, conversion rates, and yield per send. From that, it produced a forensic analysis: the “10 Laws of What Makes Our Copy Win.”

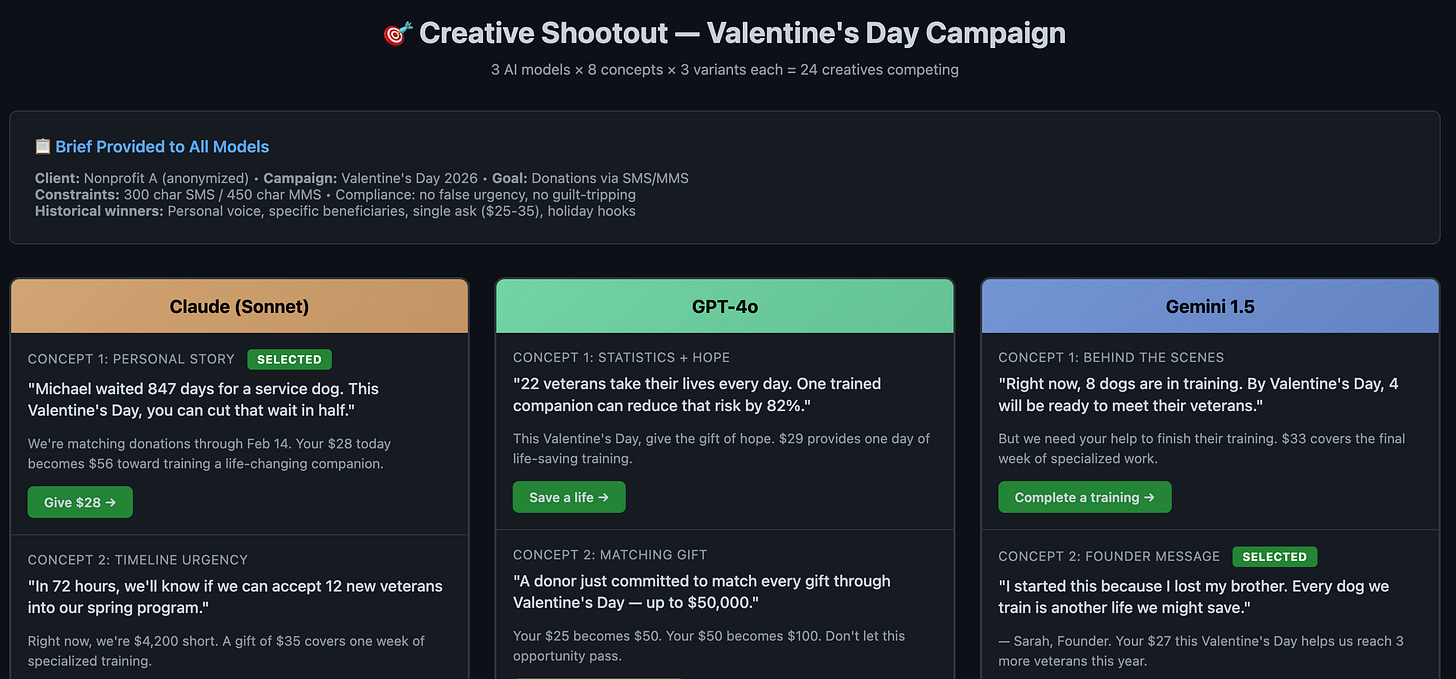

Then, when it was time for a Valentine’s Day campaign, it didn’t just brainstorm ideas. Three different AI models — Claude, GPT, and Gemini — competed in a “creative shootout.” Each got the same brief: our historical performance data, our compliance constraints, our donor demographics, our best-performing patterns. They produced 24 creatives across 8 concepts.

I picked 4 winners. Then the AI:

Downloaded fresh photos from the nonprofit’s website

Resized images to meet platform requirements (under 600KB)

Logged into the campaign dashboard

Selected the correct client from the dropdown

Filled in every form field — Creative ID, author, approval status

Uploaded images for MMS creatives

Pasted landing page copy into the WYSIWYG editor

Clicked submit. Four times.

Total time for the automated posting: 8 minutes 40 seconds. Zero human keystrokes on the dashboard.

The entire pipeline — from historical analysis to live campaign creatives sitting in the dashboard — ran with about 15 minutes of my input. Picking winners. Reviewing copy. Saying “go.”
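For readers who want to see the mechanics, the "resize until it fits" step in the list above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the code the AI actually ran: `encode` is a hypothetical callable that re-encodes the image at a given quality and returns bytes (in practice an image library would do that part), and "under 600KB" is read here as 600 × 1024 bytes.

```python
from typing import Callable, Optional, Tuple

# Assumption: the platform's "under 600KB" limit is read as 600 * 1024 bytes.
MAX_BYTES = 600 * 1024


def fit_under_limit(
    encode: Callable[[int], bytes],
    max_bytes: int = MAX_BYTES,
    qualities: Tuple[int, ...] = (90, 80, 70, 60, 50, 40),
) -> Optional[Tuple[int, bytes]]:
    """Return (quality, data) for the first quality whose output is under max_bytes.

    `encode` is a hypothetical callable that re-encodes the image at the
    given quality setting. Returns None if no listed quality is small enough.
    """
    for quality in qualities:
        data = encode(quality)
        if len(data) < max_bytes:
            return quality, data
    return None


if __name__ == "__main__":
    # Stand-in encoder for the demo: pretends each quality point costs 10,000 bytes.
    def fake_encode(quality: int) -> bytes:
        return b"\0" * (quality * 10_000)

    result = fit_under_limit(fake_encode)
    if result is not None:
        quality, data = result
        print(f"quality={quality}, size={len(data)} bytes")
```

Trying the highest quality first keeps the image as sharp as the limit allows; a `None` result is the signal to shrink the pixel dimensions instead.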

Case Study 2: The Overnight Army

The problem: A new client needed a complete creative strategy — data analysis, competitive benchmarks, performance forensics, copy recommendations. The kind of deliverable that takes a senior analyst 2-3 full days.

What happened: At 9 PM, I gave my AI the raw campaign data and said “analyze this.” Then I went to bed.

By 6 AM, waiting for me:

A 46,000-word forensics document with “10 Laws of What Makes Copy Win” — specific to this client, derived from message-level analysis of every creative they’d ever run

An interactive HTML dashboard with sortable tables, charts, and filterable rules

A competitive shootout reference file

A growth strategy dashboard projecting revenue scenarios across 5 different optimization levers

The AI hadn’t just analyzed the data. It had caught something I would have missed: one creative that appeared to be a top performer was actually inflated by external vendor sends. When the AI segmented the data three ways — internal-only, all internal, and including externals — the “winner” evaporated. The real winners told a completely different story about what copy patterns drive donations.
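The segmentation check is easy to reproduce in principle. Here is a minimal sketch, assuming each row records a creative, a channel label, sends, and revenue. The channel labels and all the numbers are made up for illustration, and because the exact three-way split isn't spelled out above, the sketch compares only two views: all sends versus internal sends.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple, Optional


class Send(NamedTuple):
    creative: str
    channel: str  # "internal" or "external" (hypothetical labels)
    sends: int
    revenue: float


def yield_per_send(rows: Iterable[Send], channel: Optional[str] = None) -> Dict[str, float]:
    """Revenue per send for each creative, optionally restricted to one channel."""
    sends: Dict[str, int] = defaultdict(int)
    revenue: Dict[str, float] = defaultdict(float)
    for row in rows:
        if channel is not None and row.channel != channel:
            continue
        sends[row.creative] += row.sends
        revenue[row.creative] += row.revenue
    return {c: revenue[c] / sends[c] for c in sends if sends[c] > 0}


def winner(yields: Dict[str, float]) -> str:
    """Creative with the highest yield per send."""
    return max(yields, key=lambda creative: yields[creative])


# Made-up numbers, for illustration only.
ROWS: List[Send] = [
    Send("A", "internal", 10_000, 500.0),
    Send("A", "external", 2_000, 900.0),
    Send("B", "internal", 10_000, 800.0),
]

if __name__ == "__main__":
    print("all sends:", winner(yield_per_send(ROWS)))
    print("internal only:", winner(yield_per_send(ROWS, "internal")))
```

With these toy rows, creative A leads when every send is counted and B leads once external sends are filtered out, which is the same kind of reversal described above.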

Four sub-agents worked in parallel overnight. Each handled a different piece. They coordinated through shared files — one agent’s output became another agent’s input. By morning, the deliverable was more thorough than what I’d have produced in three days of focused work.
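The "coordinate through shared files" pattern is simpler than it sounds. Below is a minimal sketch, assuming each worker is just a Python function that returns a dictionary. Real sub-agents would be model-driven processes, and the file names and the `summary` stage are hypothetical, but the hand-off is the same idea: independent workers each write a file, and a later worker reads those files as its input.

```python
import json
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import Callable, Dict, List


def run_stage(workdir: Path, name: str, task: Callable[[], dict]) -> Path:
    """Run one worker and write its result to <workdir>/<name>.json."""
    out = workdir / f"{name}.json"
    out.write_text(json.dumps(task()))
    return out


def run_pipeline(workdir: Path, parallel_tasks: Dict[str, Callable[[], dict]]) -> dict:
    """Run independent workers in parallel, then a final worker that reads their files."""
    with ThreadPoolExecutor(max_workers=max(1, len(parallel_tasks))) as pool:
        futures = [
            pool.submit(run_stage, workdir, name, task)
            for name, task in parallel_tasks.items()
        ]
        paths: List[Path] = [future.result() for future in futures]

    # The "summary" worker takes the other workers' output files as its input.
    merged = {path.stem: json.loads(path.read_text()) for path in paths}
    summary_path = run_stage(
        workdir, "summary", lambda: {"inputs": sorted(merged), "data": merged}
    )
    return json.loads(summary_path.read_text())


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        result = run_pipeline(
            Path(tmp),
            {"stats": lambda: {"rows": 3}, "copy": lambda: {"drafts": 2}},
        )
        print(result["inputs"])
```

Files make the hand-off inspectable: if one worker fails, the others' output is still sitting on disk, and the failed stage can be rerun on its own.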

I reviewed it over breakfast. Made two edits. Shipped it.

Case Study 3: The Live Demo That Blew Up the Room

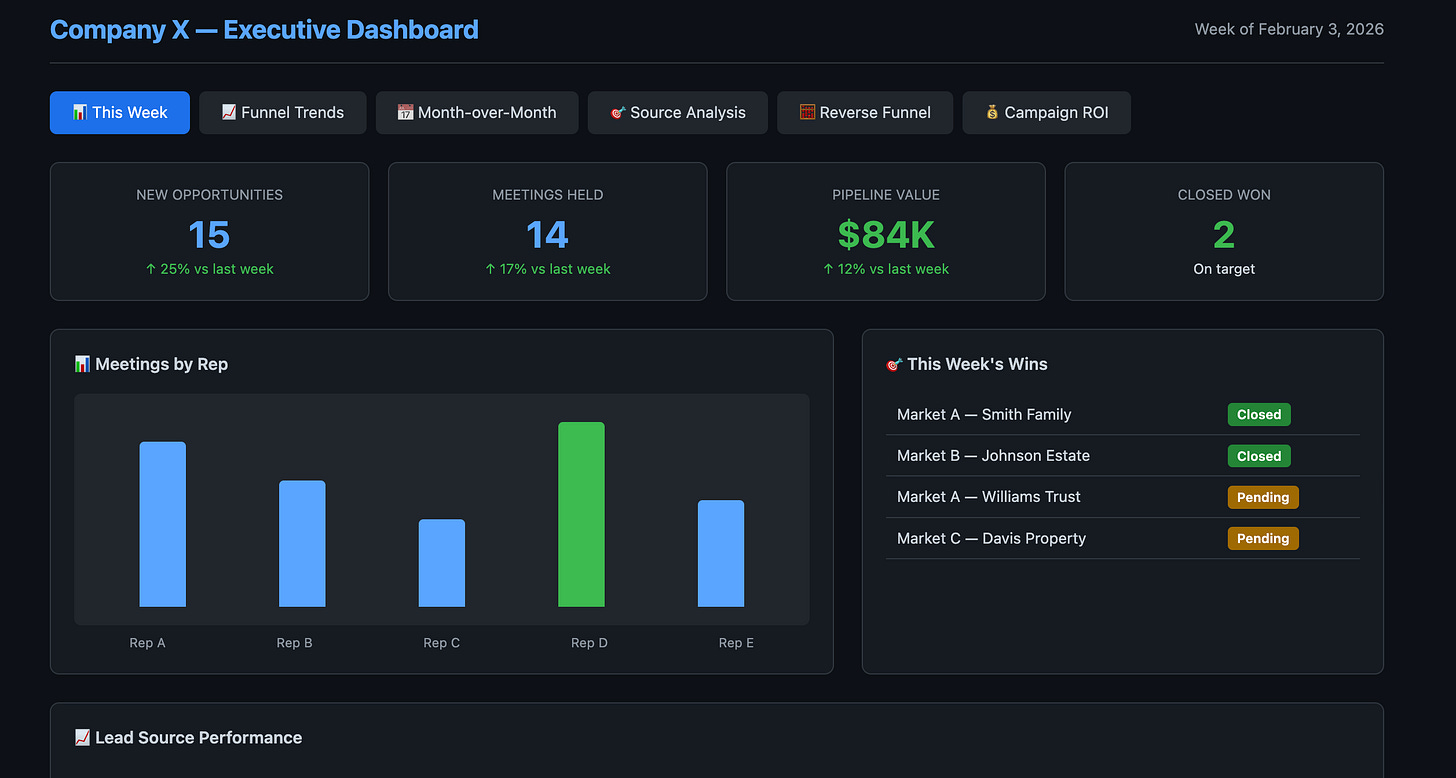

The problem: A real estate company running hundreds of leads per month through Salesforce had no quick way to get answers. “How many new opportunities this week?” required logging into Salesforce, navigating to reports, building a filter, waiting for it to run. Nobody bothered. Data sat unused.

The demo: I connected my AI to their Salesforce instance and dropped it into an iMessage group chat with four executives.

They started asking questions. Real questions, not tests:

“How many new opportunities this week?”

→ 14 new opps, broken down by market.

“What’s our top rep’s meeting attendance for January?”

→ 25 attended, 16 with real estate in play.

“Break down our lead sources by campaign.”

→ Paid search driving over half of wins, organic growing, and a brand new AI-powered search engine appearing as a lead source for the first time.

Each answer came back in seconds, formatted right in the chat. No dashboards to log into. No reports to build. Just ask.
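Under the hood, a question like "How many new opportunities this week?" has to become a Salesforce query. Here is a minimal sketch of what that translation might look like, written as a small Python helper that builds the SOQL text. `Opportunity` and `CreatedDate` are standard Salesforce names and `THIS_WEEK` is a standard SOQL date literal; `Market__c` is a hypothetical custom field, and the helper itself is illustrative rather than what the AI actually generated.

```python
from typing import Optional

# A few standard SOQL date literals the sketch is willing to accept.
ALLOWED_PERIODS = {"TODAY", "THIS_WEEK", "LAST_WEEK", "THIS_MONTH", "LAST_MONTH"}


def new_opportunities_soql(period: str = "THIS_WEEK", group_by: Optional[str] = None) -> str:
    """Build a SOQL query counting opportunities created in the given period."""
    if period not in ALLOWED_PERIODS:
        raise ValueError(f"unsupported period: {period}")
    if group_by is None:
        return f"SELECT COUNT(Id) FROM Opportunity WHERE CreatedDate = {period}"
    # Only allow plain field names, so the query text cannot be altered.
    if not group_by.replace("_", "").isalnum():
        raise ValueError(f"invalid field name: {group_by}")
    return (
        f"SELECT {group_by}, COUNT(Id) FROM Opportunity "
        f"WHERE CreatedDate = {period} GROUP BY {group_by}"
    )


if __name__ == "__main__":
    print(new_opportunities_soql())
    # Market__c is a hypothetical custom field used for the per-market breakdown.
    print(new_opportunities_soql(group_by="Market__c"))
```

The resulting string would then be sent through whatever Salesforce client the agent is connected with; that part is left out here.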

Then, for their next morning’s executive meeting, the AI built a 6-tab interactive dashboard: weekly snapshot, lead funnel trends, month-over-month tracking, source analysis, a reverse-funnel calculator with editable assumptions, and campaign ROI by market.

The CEO called afterward. One word: “Blown away.”

The AI didn’t just answer questions. It identified the insight nobody had surfaced: their secondary market was converting at a third the rate of their primary — same ad spend, same lead volume, wildly different close rates. That’s a process problem, not a marketing problem. It changed how they allocated their next quarter’s budget.
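The reverse-funnel calculator mentioned above is, at heart, a division problem: start from the number of closed deals you want and work backward through each stage's conversion rate. Here is a minimal sketch, assuming a simple linear funnel. The stage rates in the demo are made-up illustrations, not the client's numbers.

```python
import math
from typing import Sequence


def leads_needed(target_deals: int, stage_rates: Sequence[float]) -> int:
    """Work backward from a deal target through per-stage conversion rates.

    `stage_rates` lists the conversion rate of each funnel stage in order,
    for example (lead -> meeting, meeting -> closed deal).
    """
    needed = float(target_deals)
    for rate in reversed(stage_rates):
        if not 0 < rate <= 1:
            raise ValueError(f"rate must be in (0, 1], got {rate}")
        needed /= rate
    # Round away float noise before rounding up to whole leads.
    return math.ceil(round(needed, 9))


if __name__ == "__main__":
    # Hypothetical rates: same lead-to-meeting rate, different close rates.
    primary = leads_needed(10, (0.5, 0.25))
    secondary = leads_needed(10, (0.5, 0.125))
    print(f"primary market needs {primary} leads, secondary needs {secondary}")
```

Making the rates editable is what turns this from a report into a planning tool: halve one close rate and the lead requirement for the same deal target doubles.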

Case Study 4: The Content Assembly Line

📦 This entire pipeline is available as an open-source skill. The "yt-content-engine" skill handles everything described below — transcript extraction, blog drafting, Substack publishing, Twitter threads, and HeyGen video generation. One URL in, five platforms out.

The problem: I publish a newsletter with 22,000 subscribers. I also maintain a presence on X/Twitter, and I want to start producing short-form video. Creating content for one platform was manageable. Creating it for four was impossible.

Especially when you have nine kids. My content strategy for three years was basically: pick the one platform I had 20 minutes for and ignore the rest.

What the AI built: A pipeline where one input produces everything.

It starts with a single URL — a YouTube video, a podcast, a source document. From there:

Transcript extraction — AI pulls and cleans the transcript

Blog draft — A 2,200-word article in my voice, with sourced citations (not hallucinated — it verifies links)

Substack publishing — Formats the HTML, compiles a clipboard-ready version, pastes it directly into the Substack editor via browser automation

X/Twitter thread — Condensed version with hooks optimized for engagement

Avatar video — The article gets split into script segments, fed to a HeyGen AI avatar (trained on my likeness), rendered as vertical video clips ready for social distribution

One URL in. Five platforms out.
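One step in that list is easy to picture in code: splitting an article into script segments for video. Here is a minimal sketch, assuming segments are cut at sentence boundaries and capped by word count. The 120-word default is an arbitrary illustration, and this is not the actual "yt-content-engine" implementation.

```python
import re
from typing import List


def split_into_segments(text: str, max_words: int = 120) -> List[str]:
    """Group whole sentences into segments of at most max_words words.

    A single sentence longer than max_words is kept intact as its own segment.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    segments: List[str] = []
    current: List[str] = []
    count = 0
    for sentence in sentences:
        words = len(sentence.split())
        # Start a new segment when adding this sentence would exceed the cap.
        if current and count + words > max_words:
            segments.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += words
    if current:
        segments.append(" ".join(current))
    return segments


if __name__ == "__main__":
    sample = "One two three. Four five six. Seven eight nine."
    for segment in split_into_segments(sample, max_words=6):
        print(segment)
```

Cutting only at sentence boundaries keeps each clip from starting or ending mid-thought, at the cost of slightly uneven clip lengths.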

The article I published last week — 2,200 words, 8 cited sources, formatted with images and pull quotes — went from “I should write about this” to “live on Substack” in under an hour. The majority of that hour was me reading and editing, not producing.

Previously, I’d have picked one platform and skipped the rest. Now the constraint isn’t production capacity. It’s editorial judgment.

Case Study 5: The Two-Minute Miracle

Not everything has to be dramatic. Sometimes the best demonstration is the smallest one.

Someone required wet signatures from me on five years of documents. Each document was a password-protected PDF — different passwords. The signature pages were buried deep inside multi-hundred-page files. I needed to find them, extract them, and print them.

The old way: open each PDF, enter the password, scroll through pages looking for signature lines, figure out how to extract just those pages, repeat five times, probably mess up and re-do at least two.

What I told my AI: “Unlock these PDFs, find the signature pages, and combine them into one printable document.”

Two minutes later: a single PDF on my desktop. Every signature page from all five years, in order, ready to print.
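The interesting part of that task is finding the signature pages. Here is a minimal sketch of that step only, assuming the PDFs have already been unlocked and each page's text extracted by a PDF library (pypdf, for instance, provides `PdfReader.decrypt()` and per-page `extract_text()`). The pattern below is a guess at what a signature page looks like, not what the AI actually used.

```python
import re
from typing import Dict, List, Tuple

# Heuristic (an assumption): a signature page mentions signing or has a long blank line.
SIGNATURE_PATTERN = re.compile(
    r"\b(signature|sign here|signed by)\b|_{10,}", re.IGNORECASE
)


def find_signature_pages(docs: Dict[str, List[str]]) -> List[Tuple[str, int]]:
    """Return (document name, zero-based page index) for every likely signature page.

    `docs` maps a document name to the extracted text of each of its pages.
    Documents are visited in sorted name order so the output is stable.
    """
    hits: List[Tuple[str, int]] = []
    for name in sorted(docs):
        for index, page_text in enumerate(docs[name]):
            if SIGNATURE_PATTERN.search(page_text):
                hits.append((name, index))
    return hits


if __name__ == "__main__":
    # Made-up page text, for illustration only.
    docs = {
        "2021.pdf": ["Introduction", "Signature: ____________"],
        "2020.pdf": ["Cover", "Body text", "Sign here"],
    }
    for name, page in find_signature_pages(docs):
        print(name, page)
```

The list of (document, page) pairs is exactly what a PDF writer needs to copy those pages into one combined file for printing.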

No drama. No dashboard. No pipeline. Just a maddening 45-minute task compressed into a sentence and a two-minute wait.

This is the one that convinced me. Not the creative pipelines or the live demos. This. Because everyone has a version of this task — the one that’s not hard enough to hire someone for, not important enough to build a system around, but just annoying enough to ruin 45 minutes of your afternoon three times a month.

AI handles it now. All of them. Every single one.

What Actually Changed

If you’ve read this far and you’re thinking “OK, but I’ve used ChatGPT and it’s not like this” — you’re right. It’s not. Here’s what’s different:

Persistence. My AI remembers context across days and weeks. It knows my clients, my preferences, my tools, my voice. I don’t re-explain anything. It picks up where we left off, every session.

Parallelism. It spawns sub-agents. While one is analyzing data, another is researching content, a third is downloading images, a fourth is drafting copy. They work simultaneously and coordinate through shared files.

Tool use. It operates real software. Browsers, databases, APIs, file systems, CLIs. It doesn’t describe what you should do in Salesforce — it queries Salesforce. It doesn’t suggest what to type in the form — it fills in the form.

Scheduling. Tasks run at 2 AM. Analyses complete overnight. Engagement sweeps fire twice a day. Morning briefings arrive before I’m out of bed.
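Scheduling is the least magical item on this list. Here is a minimal sketch of the core calculation, assuming a single daily run time: given the current time, when is the next 2 AM? A real scheduler (cron, or whatever the agent platform provides) does this for you; the function name is hypothetical.

```python
from datetime import datetime, time, timedelta


def next_run(now: datetime, at: time = time(2, 0)) -> datetime:
    """Return the next occurrence of the daily run time strictly after `now`."""
    candidate = datetime.combine(now.date(), at, tzinfo=now.tzinfo)
    if candidate <= now:
        # Today's slot has already passed, so use tomorrow's.
        candidate += timedelta(days=1)
    return candidate


if __name__ == "__main__":
    evening = datetime(2025, 1, 1, 21, 0)  # 9 PM, arbitrary example date
    print(next_run(evening))
```

This sketch ignores daylight-saving transitions, which a production scheduler would need to handle.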

Memory. It takes notes. It updates its own documentation. It learns from mistakes. When something breaks, it documents the fix so it doesn’t break again.

This isn’t a chatbot you type questions into. It’s an operating system for knowledge work.

The Question That Matters

My colleague’s question was “why is this different?” But that’s the wrong question.

The right question is: “What are you still doing manually that you shouldn’t be?”

Not “what could AI theoretically help with” — we’ve been answering that for three years. The question is what can you hand off. Entirely. Today.

For me, the answer turned out to be about 70% of my workday. The remaining 30% — the creative direction, the strategic judgment, the relationship management — is better because I actually have time and energy for it.

AI didn’t replace my job. It replaced the parts of my job that were preventing me from doing my job.

That’s what changed.

Justin Hart is the author of “Gone Viral: How Covid Drove the World Insane” (Regnery, 2022) and publishes Rational Ground, a newsletter with 22,000+ subscribers.

P.S. — I want to be transparent about how this article was made, because it kind of proves the point.

I described what I wanted — the colleague conversation, the thesis, the general shape. I told my AI to pick the best case studies from our work together. It chose five, drafted the article, and presented it for review.

Then I had to grab lunch before a 1:00 client meeting. So I asked it to chunk the draft into audio files and send them to me on Telegram. I listened to my own article — written by my AI, about my AI — through voice messages in a drive-through lane.

I sent my revision notes by voice memo while waiting for my food. The edits were done before I got back to the office.

This is my life now. And honestly? AI was driving the car too.

So Justin, what happens when people realize they don’t need you for writing anything at all? Are you and your Substack becoming obsolete? I want real human thought, not perfection. And AIs do make mistakes; it is not good to rely on them exclusively. As an assist to productivity, I can see that. But you are letting it replace you entirely.

I was getting into this and then realized... did he even write this? Did AI? Did the human being with (true) thoughts and emotions and background and history and HUMANITY write this?

"AI didn’t replace my job."

It did mine. Why do they need me to do transcription? Why do they need me to sit there for hours, listening to people speak and doing the very difficult work of typing out not just every word that is said, but every "um/uh", "like", "you know", etc.?

Except that's not the hardest part. As long as the audio is clear, that's the "easy" part. The hard part is taking normal speech and turning it into legible sentences that others can read and understand, without changing the meaning of what the people are saying, keeping the personality, as it were, of each person speaking, and somehow even managing to keep the tone.

That's the true work. It's as much art as it is work. A machine, a program can't do that.

But nobody cares about that, just like they don't care about the fake music, fake articles/books, fake movies, fake everything else this technology is churning out.

***

Again, it has been such a shock to see you promoting this stuff. I would have assumed you (of all people!) would have been on the front lines warning us and fighting against it.

I won't lie, this one hurts.